A Comprehensive Guide to Technical SEO

SEO beginners will hear the same refrain over and over again: focus on the content. The story goes that the core of SEO is creating high-quality, uniquely valuable content, then getting other websites to link to it.

While it’s true that content is essential to SEO, it’s not the be-all and end-all – you need to focus on technical SEO, too.

In this comprehensive guide, we’ll explain exactly what technical SEO is and walk through steps you can take right now to improve your site’s SEO. The tools and tactics we present here are inexpensive or free to implement – the biggest cost is the labour.

By the end, you’ll understand exactly why technical SEO is worth the effort, and how each change can help your ranking on search engine results pages (SERPs).

Let’s get started.

What is Technical SEO?

Technical SEO is a strategy composed of tactics used to help Google (and other search engines) crawl and index your pages. When your pages aren’t crawled or indexed, they won’t appear in search engine results.

Crawling and Indexing

Crawling and indexing are two distinct but intimately linked activities conducted by search engines. These engines use bots to “crawl” the Web, following links from a given page to see how it connects to:

- Other pages on the website (internal links)

- Other pages around the Web (external links)
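To make the internal/external split concrete, here’s a minimal Python sketch (standard library only) of what a crawler does with a single page: collect its links and sort them by whether they point at the same host. The function names and the mysite.com URLs are illustrative, and a real crawler handles much more, such as www vs. non-www hosts, redirects and robots rules.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, resolved against the page URL."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            # Turn relative links such as "/about" into absolute URLs.
            self.links.append(urljoin(self.page_url, href))


def classify_links(page_url: str, html: str) -> dict[str, list[str]]:
    """Sort a page's web links into internal (same host) and external."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    host = urlparse(page_url).netloc
    result: dict[str, list[str]] = {"internal": [], "external": []}
    for link in collector.links:
        parsed = urlparse(link)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        kind = "internal" if parsed.netloc == host else "external"
        result[kind].append(link)
    return result


page = '<a href="/about">About</a> <a href="https://example.org/">Partner</a>'
print(classify_links("https://mysite.com/", page))
# {'internal': ['https://mysite.com/about'], 'external': ['https://example.org/']}
```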

Indexing is an action search engines can take after they crawl a page, adding the page to their index (and making the page searchable). Not all pages that are crawled will be indexed, and with rare exceptions (which we’ll touch on in the Robots.txt section), pages are crawled before they’re indexed.

Obviously, you want your pages popping up on SERPs, and for that to happen, each page you want to appear generally needs to be crawled and indexed first.

99% Invisible

At this point, some of you might be glazing over – doesn’t focusing on the behaviour of robots detract from the user experience?

No. In fact, it’s the opposite.

I stole the title of this section from the podcast “99% Invisible”, so named because good design goes largely unnoticed. It’s bad design that gets noticed, because, in some way, it obstructs you.

Search engines want your good design to go unnoticed – in other words, they want your users to have an unobstructed experience. When a web crawler (one term for the robots they use) has a bad experience, it’s likely that your users will have a bad experience too. Almost all of the tips we give here, from designing your site structure to choosing the right URLs, improve user experience as well as crawling and indexing.

Tools

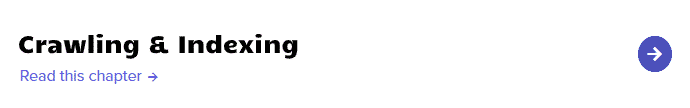

Throughout this article, I’ll refer to a few tools you can use to check the health of your technical SEO.

The most important of these tools is Google Search Console (GSC). GSC is free to use, and it provides insights into things like:

- How Google sees your website (which pages have been crawled/indexed, etc.)

- Which search queries are attracting users to your site, along with impressions and your average position

- Alerts on site issues

GSC also allows you to interact with Google by submitting sitemaps (more on that later) and reporting when issues on your site have been fixed.

There are a number of third-party tools that can help you crawl your own website and detect issues. Screaming Frog is popular in our office for technical SEO work, but there’s a slew of other tools from Moz, Ahrefs, and others – these tend to be more expensive (though, in some ways, more comprehensive) than Screaming Frog.

Looking for an inexpensive way to crawl your site? You could always try Xenu’s Link Sleuth for a free option – it hasn’t been updated since 2010, and it doesn’t have a lot of features, but it’s great if you just want to find broken links.

Don’t be too intimidated if you don’t quite know what these tools do or why you’d want to use them – all will be explained. Let’s start by helping users (and bots) navigate your website.

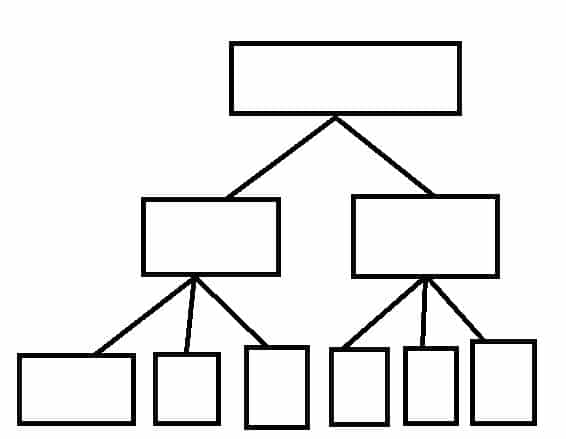

Your Website's Structure

Imagine you’re in a mall, looking for the food court. You check the map of the mall, and you see that in order to get to the food court, you’ve got to go through a Walmart, which is directly connected to a Best Buy, which is in turn connected to a Bed Bath & Beyond. There is no other path to the food court – you must go through all three stores first.

I don’t know about you, but I’d about-face and leave that mall immediately.

That’s what a poorly structured website looks like to its visitors. Your pages need to be organized in an efficient hierarchy.

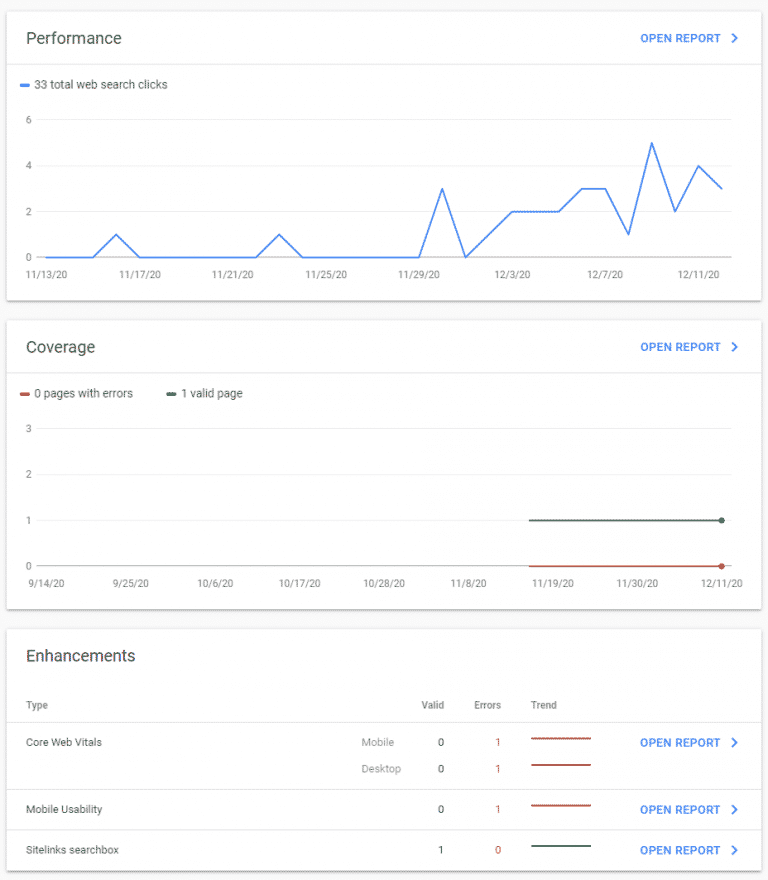

Optimizing Website Structure

From your website’s homepage, it should take 3 clicks, at most, to reach any other page on your website.

“But wait!”, I hear you saying, “I have thousands of pages! How is that possible?”

It’s all in your site’s architecture, my friend.

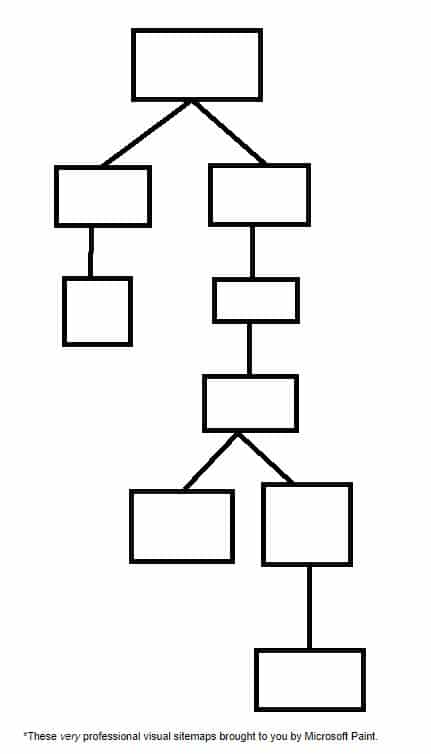

Let’s look at this in two ways: mathematically and visually.

On the mathematical side of things, your homepage is “click 0”. Imagine you have 10 links going from your homepage – “click 1” gets you to 10 new pages. From each of those pages, you might have another 10 links – “click 2” gets you to 100 unique pages. From each of those pages, another 10 links, and suddenly your users can access 1000 pages in 3 clicks.
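The arithmetic is easy to check. Assuming the idealized case where every page links to 10 pages that nothing else links to, click k reaches 10^k new pages; this small Python sketch (the function name is our own) spells it out:

```python
def pages_by_click(links_per_page: int, max_clicks: int) -> list:
    """New pages reached at each click in a perfect tree, where every page
    links to `links_per_page` pages that nothing else links to."""
    return [links_per_page ** click for click in range(1, max_clicks + 1)]


counts = pages_by_click(10, 3)
print(counts)       # [10, 100, 1000]
print(sum(counts))  # 1110
```

Counting every click along the way, that’s 1,110 pages within 3 clicks; bump the links per page to 20 and click 3 alone reaches 8,000.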

It’s that simple. Now imagine you have more links on any one of those pages – you can easily multiply the number of pages that are accessible within 3 clicks. This makes your website easier to navigate for both users and bots.

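Now for the visual side. Picture a deep hierarchy, where pages hang off one another in a long chain (like the stores in our mall), next to a shallow one, where the homepage fans out so that every page is 3 clicks away or fewer.

It’s pretty easy to see which one is going to be easier to navigate. Expand that to thousands of pages and you can see why it’s important to keep your hierarchy shallow.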

Obviously, the examples that we’ve talked about here are highly idealized – it’s unlikely that every page will have exactly 10 links from it – but try to get your website as close to this ideal form as possible.
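If you want to check how close your site gets, many crawling tools can report click depth, but the idea is simple enough to sketch. Assuming you’ve exported your internal links as a page-to-links mapping (the small graph below is made up), a breadth-first search from the homepage gives each page’s minimum number of clicks, and anything deeper than 3 is a candidate for better internal linking:

```python
from collections import deque


def click_depths(links: dict, home: str) -> dict:
    """Breadth-first search from the homepage. The first time we reach a
    page is via its shortest click path, so that's its click depth."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/toronto", "/about"],
    "/toronto": ["/toronto/seo"],
    "/toronto/seo": ["/toronto/seo/top-50-tips"],
    "/toronto/seo/top-50-tips": ["/toronto/seo/top-50-tips/archive"],
}
depths = click_depths(site, "/")
too_deep = [page for page, depth in depths.items() if depth > 3]
print(too_deep)  # ['/toronto/seo/top-50-tips/archive']
```

Pages that never show up in the result at all have no internal links pointing to them, which is worth knowing too.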

URL Structure

One way to approach this idealized structure is to be mindful of how your site’s URLs are built. There are three core things to keep in mind with URL structure:

- One site is easier to rank than multiple sites

- Subfolders are preferred to subdomains (mysite.com/toronto over toronto.mysite.com)

- Multiple subfolders are preferred over long, hyphenated URLs (mysite.com/toronto/seo/top-50-tips instead of mysite.com/toronto-seo-top-50-tips)

By consistently formatting your URLs this way, you naturally keep your site in line with an optimal structure. Note that in the example given, it takes 3 clicks to get to the article, and it’s easy for users (and bots) to navigate back the way they came.
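Because each subfolder is a level of the hierarchy, the way back is spelled out in the URL itself. Here’s a small Python sketch (the function name is hypothetical, and it assumes every subfolder level is a real page on your site) that lists the pages above a given URL:

```python
from urllib.parse import urlparse


def parent_urls(url: str) -> list:
    """List every subfolder level above a URL, from the homepage down."""
    parsed = urlparse(url)
    base = f"{parsed.scheme}://{parsed.netloc}"
    segments = [s for s in parsed.path.split("/") if s]
    # Each prefix of the path (minus the page itself) is one level up.
    return [base + "/"] + [
        base + "/" + "/".join(segments[:i]) for i in range(1, len(segments))
    ]


print(parent_urls("https://mysite.com/toronto/seo/top-50-tips"))
# ['https://mysite.com/', 'https://mysite.com/toronto', 'https://mysite.com/toronto/seo']
```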

Breadcrumb Navigation

Hansel and Gretel’s use of breadcrumbs was ill-fated – fortunately, there are no digital birds to devour the breadcrumbs you leave on your website.

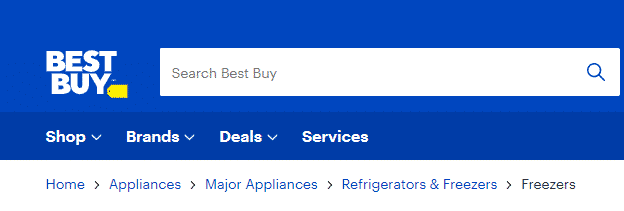

Breadcrumb navigation lets users retrace their steps back to the homepage. It’s often used by large e-commerce sites, or by sites with so much content that it’s nearly impossible to fit the idealized 3-click structure we’ve described. Breadcrumb navigation looks like this:

Homepage > Page clicked from homepage > Page clicked from second page … > Page you’re on.
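Breadcrumbs are usually generated by your CMS or theme, but the markup behind them is simple. As an illustrative sketch (the function name, labels and URLs are hypothetical), this Python snippet renders a trail in the format above, linking every step except the page you’re on:

```python
from html import escape


def breadcrumb_html(trail: list) -> str:
    """Render (label, url) pairs as a breadcrumb trail. Every step but the
    last is a link; the last is the page you're on."""
    parts = [
        f'<a href="{escape(url)}">{escape(label)}</a>' for label, url in trail[:-1]
    ]
    parts.append(escape(trail[-1][0]))
    return '<nav aria-label="Breadcrumb">' + " &gt; ".join(parts) + "</nav>"


trail = [
    ("Homepage", "/"),
    ("Toronto", "/toronto"),
    ("SEO", "/toronto/seo"),
    ("Top 50 SEO Tips", "/toronto/seo/top-50-tips"),
]
print(breadcrumb_html(trail))
```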

Here’s an example from Best Buy:

I can immediately tell what page I’m on (freezers), and how I got there, so it’s easy to go back if I don’t find what I’m looking for.

You should only implement breadcrumb navigation if it makes sense for your website. Most restaurants and retailers with only a few different products won’t benefit from this type of navigation – it’s best for navigating when there are dozens (or hundreds) of different category pages, each with different products.

Web Page Management

Now that you understand how your site should be structured, it’s time to look at the building blocks of that structure – your web pages.

Unique Content

Every page that you want indexed by search engines should be unique and useful to users. Later in this guide, we’ll talk about how to keep search engines from crawling and indexing pages you don’t want them to. For now, let’s learn how to choose URLs, and how to handle pages that aren’t unique and/or useful.

Choosing URLs

We’ve already discussed how URLs should be structured – use those subfolders, people! When it comes to choosing URLs, keep it simple – the end of your URL should almost always reflect the page’s title.

Let’s look at the example from above: the title of mysite.com/toronto/seo/top-50-tips should almost certainly be “Top 50 SEO Tips”, “Top 50 SEO Tips for Toronto”, or some such similar thing.
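If your CMS doesn’t generate URL endings for you, turning a title into one is easy to script. A minimal Python sketch (the slugify name is our own; many CMSs have an equivalent built in) that lowercases the title, strips accents and joins the words with hyphens:

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphenated URL ending."""
    # Decompose accented letters (é -> e + accent), then drop the accents.
    ascii_title = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Replace every run of characters that isn't a letter or digit with "-".
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")


print(slugify("Top 50 Tips"))               # top-50-tips
print(slugify("Café Guide: Best Patios!"))  # cafe-guide-best-patios
```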

This does a couple of things: it improves user experience by keeping things consistent, and it makes your life easier when you’re trying to troubleshoot errors, redirect pages, and do other technical SEO work.

Handling Duplicate Pages

Things are going to be more technical from here on out – stick with it. Trust me, it’s worth it.

You may encounter pages on your website that are essential to a user’s experience but not unique. This occurs, for example, when you have a product available in multiple colours.

While you may need a unique URL for each colour, a Google user only needs one URL – from there, they can simply click the different colours to see what they like.

All of the text describing the product is likely to be the same. The price is likely to be the same. In these ways, the separate pages are not unique – and search engines frown on what they see as duplicate content.

Enter the canonical tag.

<link rel="canonical" href="https://thecanonicalpage.com/">

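Placed in the <head> of a duplicate page, this tag tells search engines: “This page is not unique. The content on this page is a duplicate of the content on the canonical page. When crawling, indexing, and displaying search results, use the canonical page.” Google treats the tag as a strong hint rather than a strict command, so consistent signals matter.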
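To see the tag from a crawler’s side, here’s a short Python sketch (standard library only; the names and URLs are illustrative) that reads the canonical URL out of a page’s HTML. Search engines do far more than this, including weighing the tag against other signals, but the lookup itself is this simple:

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> it sees."""

    def __init__(self):
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split it.
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical red colour variant pointing at its main product page.
red_shoes = '<head><link rel="canonical" href="https://mysite.com/shoes/"></head>'
print(find_canonical(red_shoes))  # https://mysite.com/shoes/
```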

Canonical Tag Best Practices

There are a few things to keep in mind when using canonical tags – some of these may seem obvious, but they help to illustrate the tag’s function.

First, you want to avoid mixed signals. When Page 1 says “Page 2 is canonical” and Page 2 says “Page 1 is canonical”, search engines won’t know which one is actually canonical. Pick a page as your canonical page, and stick with it.

Second, you should know that you can self-canonicalize pages – and you should. Content management systems (CMS) and dynamic websites often append parameters (such as tracking or session codes) to URLs, and these variants can look to search engines like unique pages. By self-canonicalizing all of your pages, you’ll avoid this trap.

Finally, you may have duplicate HTTP and HTTPS versions of your pages. We’ll talk more about HTTPS and SSL in the “Page Experience” section, but for now you just need to know that it’s almost always preferable to make the HTTPS version canonical.
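If your crawling tool can export each page’s canonical URL, the first and last checks above are easy to automate. Here’s a hedged Python sketch, run on a made-up mapping, that flags canonicals pointing at a page which itself names a different canonical (the Page 1 / Page 2 mixed signal, or a chain) and canonicals that point at HTTP URLs:

```python
from urllib.parse import urlparse


def canonical_problems(canonicals: dict) -> list:
    """Flag mixed signals in a page -> canonical-URL mapping."""
    problems = []
    for page, target in canonicals.items():
        if urlparse(target).scheme == "http":
            problems.append(f"{page}: canonical points at HTTP ({target})")
        # Mixed signal: the target page names a *different* page as canonical.
        target_canonical = canonicals.get(target)
        if target != page and target_canonical not in (None, target):
            problems.append(f"{page}: {target} says {target_canonical} is canonical")
    return problems


# Hypothetical crawl export.
canonicals = {
    "https://mysite.com/page-1": "https://mysite.com/page-2",
    "https://mysite.com/page-2": "https://mysite.com/page-1",  # mixed signal
    "https://mysite.com/shoes": "https://mysite.com/shoes",  # self-canonical
    "https://mysite.com/shoes?colour=red": "https://mysite.com/shoes",
    "https://mysite.com/hats": "http://mysite.com/hats",  # HTTP canonical
}
for problem in canonical_problems(canonicals):
    print(problem)
```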

Redirects

There are times when canonicalization isn’t appropriate, but a particular page on your website is no longer unique and useful. Let’s say, for example, we’d published a comprehensive SEO guide in 2010, and in 2020 we published a new one to account for all the changes SEO has gone through in 10 years.

We don’t necessarily want to get rid of our 2010 guide – we might have a lot of external and internal links pointing to it – but we don’t want to give our users outdated information.

That’s where a 301 redirect might come in handy.

A 301 redirect tells search engines that a particular URL has been permanently moved to a different URL. Any users who go to the redirected URL will be sent to the new URL instead.
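Redirects are normally set up in your web server, CMS or a plugin, but seeing the raw response makes it clear what a 301 actually is: a status code plus a Location header. Here’s a minimal Python WSGI sketch using our 2010/2020 guide example; the paths are hypothetical:

```python
# Hypothetical old path -> new path; swap in your own URLs.
REDIRECTS = {
    "/seo-guide-2010": "/seo-guide-2020",
}


def app(environ, start_response):
    """Minimal WSGI app that permanently redirects moved pages."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        # 301 = moved permanently; Location says where the page lives now.
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [f"You are on {path}".encode("utf-8")]
```

To try it locally, you can pass app to make_server from Python’s wsgiref.simple_server module and visit the old path in a browser.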

301 redirects get used a lot in SEO. Some common uses for 301 redirects include:

- Automatically redirecting HTTP traffic to the HTTPS URL

- Redirecting traffic when restructuring your website (perfect for cases where you’re changing from subdomains to subfolders)

- Merging content that’s competing for the same keywords

- Redirecting 404s (Page not found)

Though there may be some very technical corner cases where you don’t want to use 301 redirects, if you’re reading this guide, chances are you won’t encounter them. When a move is permanent, opt for 301 redirects over other 3xx codes.

301 Best Practices

Just like with canonical tags, there are ways to keep your 301 redirects well-organized to simplify things for both you and search engines.

- Avoid redirect chains, e.g. Page 1 -> Page 2 -> Page 3. Instead, opt for Page 1 -> Page 3 and Page 2 -> Page 3.

- Avoid redirect loops, e.g. Page 1 -> Page 2 -> Page 1. This should almost never come up, but it’s worth checking for nonetheless – it’s a user experience killer (browsers give up and show an error after too many redirects).

- Don’t add 301’d pages to your XML sitemap (more on that later)

- Replace other 3xx redirects with 301 redirects

- Fix any redirects to broken pages (404s, etc.)

- Redirect all HTTP URLs to HTTPS
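Given your redirects as source and destination URLs (exported from your server config or a crawl, say), collapsing chains into single hops is a short loop. A minimal Python sketch, assuming the redirects live in a source-to-destination mapping, that points each source straight at its final destination and raises an error if it finds a loop:

```python
def flatten_redirects(redirects: dict) -> dict:
    """Point every redirected URL straight at its final destination.

    Raises ValueError if following the redirects revisits a URL,
    which means there is a redirect loop.
    """
    flattened = {}
    for start in redirects:
        seen = {start}
        target = redirects[start]
        # Keep following while the target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"Redirect loop involving {start}")
            seen.add(target)
            target = redirects[target]
        flattened[start] = target
    return flattened


chain = {"/page-1": "/page-2", "/page-2": "/page-3"}
print(flatten_redirects(chain))  # {'/page-1': '/page-3', '/page-2': '/page-3'}
```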

Finding things like redirect chains can seem complicated, especially if you’ve got a large website. This is where tools like Screaming Frog can come in handy – they can automatically find bad redirects, 3xx status codes, loops, and other redirect issues. They’re wonderful diagnostic tools, as long as you know how to fix the problems when you find them – and now you do!

301 or Canonicalization?

There are times when two pages might be very similar, but not exactly the same – in these cases, you might wonder whether you should perform a 301 redirect or simply canonicalize a page.

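As a rule of thumb, if you’re not sure which to use, opt for the 301. The key difference: a 301 sends users and bots to the new page, while a canonical tag keeps both pages available to users and only tells search engines which one to show. The cases we discussed in both sections are good examples of each, so if those cases pop up for you, just refer to this guide!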

Eliminate Useless Pages

There are, of course, cases where a page is actually useless. There’s no similar content, so a 301 isn’t appropriate – these pages should simply be eliminated (creating a 404).

Keep in mind that a page is not useless if it’s getting traffic – traffic is the whole point of SEO. If a page is getting a lot of traffic but you feel its content is outdated, create a new page with better content, and 301 the original page to the new content to keep that traffic.

There may be cases in which a page is useless to search engines but still useful to your users. These are the cases we’ll address in the next section.

Robots.txt

When search engines index a web page, it can show up in search results. There are plenty of pages you don’t want showing up on SERPs, from your own internal search results to staging pages. In this section, you’ll learn how to tell search engines what you want them to crawl and index.

In other words, you’ll learn how to control robots. That’s a pretty decent superpower.
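Robots.txt itself is a plain text file at the root of your site (mysite.com/robots.txt, for example) that lists, per user agent, which paths bots shouldn’t crawl. Here’s a hypothetical file that keeps all bots out of an /admin/ folder, checked with Python’s built-in urllib.robotparser. That parser follows the original robots.txt rules, so it may not match Google’s handling of every extension, such as wildcards:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep every bot out of /admin/, allow the rest.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://mysite.com/admin/settings", "https://mysite.com/toronto/seo"):
    # can_fetch(user_agent, url) -> may this bot crawl this URL?
    print(url, parser.can_fetch("Googlebot", url))
```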

Meta Robots and X-Robots

Robots.txt works on the directory or site level – meta robots, on the other hand, instruct robots on how to behave on the page level.

The meta robots tag should be placed in the <head>. The format is:

<meta name="robots" content="noindex">

In the name attribute, you can put robots (to instruct all bots) or the name of a specific bot (like Googlebot).

While there are a variety of things you can do with meta robots, for this introductory guide we’ll focus on content="noindex" and content="nofollow", conveniently known as noindex and nofollow, respectively.

Noindex is useful in a ton of scenarios – according to Google, any web page you don’t want indexed should be tagged with noindex. That’s because blocking crawling alone isn’t enough: Google can index a page without crawling it if other pages link to it.

Internal search results are great candidates for noindex. You don’t want to block them in your robots.txt, because you want Googlebot and other search engine crawlers to follow all of the links on your search page – it’s a great way for them to get a more complete inventory of your website. On the flipside, if I’m using a search engine, the last thing I want in the results is a link to more search engine results.
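On an internal search results page, that means adding <meta name="robots" content="noindex"> to the <head>. To check which directives a particular bot will see on a page, here’s an illustrative Python sketch (standard library only; the names are our own) that combines the generic robots tag with a bot-specific one, as described above:

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and one bot's own tag."""

    def __init__(self, bot: str):
        super().__init__()
        self.names = {"robots", bot.lower()}
        self.directives: set = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in self.names:
            # content is comma-separated, e.g. "noindex, nofollow".
            for directive in (attributes.get("content") or "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str, bot: str = "googlebot") -> set:
    parser = MetaRobotsParser(bot)
    parser.feed(html)
    return parser.directives


search_page = '<head><meta name="robots" content="noindex"></head>'
print(robots_directives(search_page))  # {'noindex'}
```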

The nofollow directive tells bots not to follow any links on a given page. It’s rarely used at the page level, because you can instead nofollow specific links using this format:

<a href="https://websiteurl.com" title="Website URL" rel="nofollow">Website URL</a>

There are a ton of things you might want to nofollow, but two of the most common cases, sponsored links and user comments, have their own rel values: use rel="sponsored" for sponsored links and rel="ugc" for user-generated content.
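If your links are generated by code (a comments system or an ad widget, say), the choice of rel value can live in one place. A small Python sketch; the link-type names paid, comment and other are hypothetical, while sponsored, ugc and nofollow are the rel values described above:

```python
from html import escape

# Hypothetical link-type labels mapped to the rel values described above.
REL_VALUES = {"paid": "sponsored", "comment": "ugc", "other": "nofollow"}


def make_link(url: str, text: str, kind: str = "") -> str:
    """Build an <a> tag, adding a rel attribute when the link needs one."""
    rel = f' rel="{REL_VALUES[kind]}"' if kind else ""
    return f'<a href="{escape(url)}"{rel}>{escape(text)}</a>'


print(make_link("https://partner.example", "Our sponsor", "paid"))
# <a href="https://partner.example" rel="sponsored">Our sponsor</a>
```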

The X-Robots-Tag HTTP header can do all of the things meta robots can do and more – you can, for example, use X-Robots-Tag to keep search engines from indexing any PDF on your site. While using X-Robots-Tag for this type of work falls outside the scope of this beginner’s guide, you can check out Google’s guide to X-Robots-Tag.
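Unlike meta robots, X-Robots-Tag is sent as an HTTP response header, which is why it works for files like PDFs that have no <head>. You’d normally set it in your web server’s configuration; purely as an illustration, here’s a Python WSGI middleware sketch (the function names are hypothetical) that adds X-Robots-Tag: noindex to every response for a .pdf path:

```python
def add_pdf_noindex(app):
    """WSGI middleware: add X-Robots-Tag: noindex to responses for .pdf paths."""

    def wrapped(environ, start_response):
        is_pdf = environ.get("PATH_INFO", "").lower().endswith(".pdf")

        def start_with_header(status, headers, exc_info=None):
            if is_pdf:
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, start_with_header)

    return wrapped


def serve_files(environ, start_response):
    """Hypothetical stand-in for whatever app serves your files."""
    start_response("200 OK", [("Content-Type", "application/pdf")])
    return [b"%PDF-1.4 ..."]


app = add_pdf_noindex(serve_files)
```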

One last note before we get off the topic of robots – some of you might have been using a noindex directive in your robots.txt file. Google stopped supporting noindex in robots.txt in 2019, so you’ll need to noindex those pages with a meta robots tag or an X-Robots-Tag header instead.

You should also avoid blocking pages you don’t want indexed in your robots.txt. That might seem counterintuitive, but if a page can’t be crawled, search engines can’t see its noindex tag, and so they may still index the page from links.

XML Sitemap

An XML sitemap is basically a file listing the pages on your site that you want search engines to crawl. You can submit an XML sitemap to Google using GSC – to create one, you can use Screaming Frog. Alternatively, if you have a relatively small website, you can use XML-Sitemaps (great if you’ve got a website with 500 pages or fewer and you don’t want to install Screaming Frog, whose free version is also limited to 500 URLs).

There may be pages you don’t want in your sitemap – canonicalized pages, noindex pages, and the like. Screaming Frog allows you to automatically exclude these pages from your XML sitemap.
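If you’d rather script it, the filtering is straightforward once you have crawl data. This Python sketch assumes hypothetical page records with a URL, status code, noindex flag and canonical URL, and writes a sitemap in the sitemaps.org format containing only pages that return 200, aren’t noindexed and are their own canonical:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list) -> str:
    """Return sitemap XML listing only pages worth crawling.

    Each page is a dict with hypothetical keys: "url", "status" (HTTP code),
    "noindex" (bool) and "canonical" (where its canonical tag points).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        keep = (
            page["status"] == 200  # drops 301s, 404s, etc.
            and not page["noindex"]  # drops noindex pages
            and page["canonical"] == page["url"]  # drops canonicalized duplicates
        )
        if keep:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = page["url"]
    xml_body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body


pages = [
    {"url": "https://mysite.com/", "status": 200, "noindex": False,
     "canonical": "https://mysite.com/"},
    {"url": "https://mysite.com/old-guide", "status": 301, "noindex": False,
     "canonical": "https://mysite.com/old-guide"},
    {"url": "https://mysite.com/search?q=seo", "status": 200, "noindex": True,
     "canonical": "https://mysite.com/search?q=seo"},
    {"url": "https://mysite.com/shoes?colour=red", "status": 200, "noindex": False,
     "canonical": "https://mysite.com/shoes"},
]
print(build_sitemap(pages))
```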

A sitemap tells Google, and any other search engine you submit it to, which pages on your site to crawl. You might hear that your site doesn’t need a sitemap if it’s relatively small (500 pages or fewer), or if it has a comprehensive internal linking scheme.

Ignore the people you hear this from – maybe not in general, but at least on the subject of sitemaps.

It’s ridiculously easy to make a sitemap, and it’s a useful way to guide crawlers. You can, for example, exclude 301 and 404 pages from your sitemap. Crawlers, despite all appearances, aren’t an infinite resource, and if too many irrelevant pages are crawled, Google might not get around to the pages you actually want indexed.

Crawling is automatic, so you don’t need to update your sitemap every time you make a change (though you can). Instead, update your sitemap anytime there’s a major overhaul of your site, or use a program that automatically updates your sitemap.

JavaScript and Indexing

For most pages, the indexing and crawling work of bots is simple – a bot crawls a page, then sends it for indexing. When links are found in the indexing process, the bot sends those links to be crawled.

With JavaScript, however, another step is needed: rendering. Bots will crawl and index what they can, but it may take a while before they get around to rendering the page. Again, bots are not an infinite resource, and rendering takes time, so pages heavy in JavaScript tend to get fully indexed long after pages without JavaScript.

For this and other reasons we’ll explore in the next section, some SEOs operate under the belief that “JavaScript is evil”. Whether or not you think that’s an extreme stance, JavaScript is necessary on many sites – it’s a matter of how it’s implemented, and how quickly you want pages on your site to be indexed.

Page Experience

The last thing we want to talk about is a set of signals Google calls “page experience”. Page experience signals are super relevant to your users, because they describe things like how quickly a page loads and how well it performs on mobile. While optimizing your page experience is out of the scope of this primer, I’ll take you through:

- What the signals are

- How you can check your performance

- Basic things to keep in mind for optimization

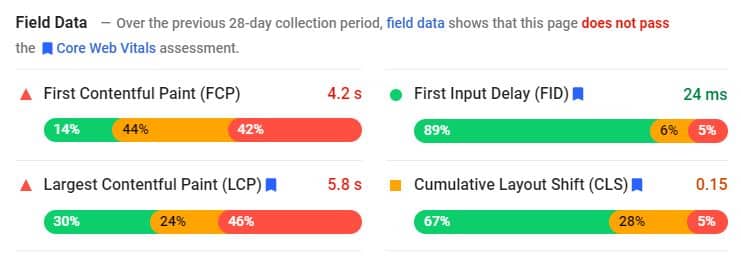

Core Web Vitals

Core Web Vitals (CWV) are the hot new commodity in the SEO world – Google is so excited about adding CWV to their ranking algorithm that they’re giving all of us several months’ notice before it goes live. May 2021 is the due date, so get your site ready!

CWV is made up of three different factors:

- Largest contentful paint (LCP), which evaluates loading speed

- First input delay (FID), which evaluates interactivity

- Cumulative layout shift (CLS), which evaluates visual stability
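Google grades each metric against published thresholds, assessed at the 75th percentile of page loads. Here’s a small Python sketch of that classification (the function name is our own). Note that since this guide was written, Google has replaced FID with Interaction to Next Paint (INP) as a Core Web Vital; INP’s “good” threshold is 200 ms.

```python
# Google's published "good" and "poor" boundaries for each metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a 75th-percentile value as good / needs improvement / poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


print(rate("LCP", 3.1))  # needs improvement
print(rate("FID", 80))   # good
print(rate("CLS", 0.3))  # poor
```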

LCP

LCP is used to determine how quickly the main content of your page loads. There’s a lot you can do to improve LCP, from minifying your CSS and JavaScript (Google has suggestions for minification tools) to using content delivery networks (CDNs).

The best way to optimize LCP, though? Reduce the amount of data that needs to be loaded. This can be done by compressing or simply removing content. Google has a bunch more tips to optimize LCP.

FID

Where LCP measures when the main content of your page loads for users, FID measures when they can first interact with that content – more specifically, how long the browser takes to start responding to a user’s first interaction.

There will always be some delay before an interactive element responds to user input – reducing this delay is the goal. Delays are almost always caused by JavaScript, and by optimizing how your JavaScript is parsed, compiled, and executed, you can seriously improve your FID metric.

Lazy-loading JavaScript (only loading it when users need it) is a great way to improve FID – lazy-loading offscreen images and videos can also help LCP. You can also simply reduce the amount of JavaScript on your page – scripts loaded without async or defer are render blocking, meaning that when the browser encounters them, it needs to stop what it’s doing and handle the JavaScript first.

All this to say, proper JavaScript management is key to both LCP and FID. You can read more in Google’s guide to optimizing FID.

CLS

GSC and PageSpeed Insights

Google really wants you to pay attention to your Core Web Vitals, as you might have guessed from the number of optimization guides they’ve provided and the name of the metric. As such, you can get insights about how a page is performing both through GSC and through Google’s PageSpeed Insights. I highly recommend using them regularly.

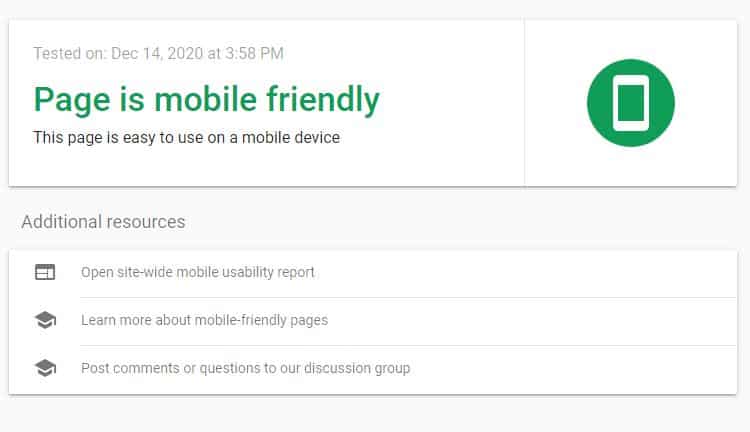

Mobile Friendly

The world is going mobile, and your website had better be mobile-friendly if you want to succeed in the SEO world.

There are a lot of tips for keeping your site mobile-friendly. You want your website to be responsive (Google offers a tool to check your mobile responsiveness). You want to design with mobile users in mind – avoiding forms with too many fields, and keeping loading times low (mobile users often have slower connections than desktop users).

You’ll also want to avoid intrusive interstitials – aka pop-ups. You know how annoying pop-up ads are – especially if you remember browsing the web in the late ’90s and early 2000s.

While these pop-ups are acceptable in some circumstances, they should not substantially interfere with the browsing experience. It should be easy for a mobile user to dismiss a pop-up and keep scrolling.

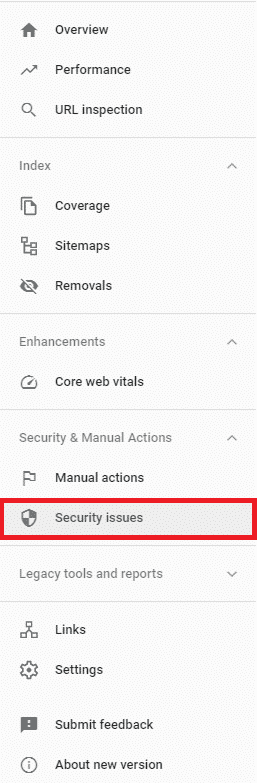

Website Security

Security is simple enough: don’t run a scam website, and serve your site over HTTPS.

On the first front, even if you’re not running a scam (I really hope you’re not), you might be flagged for security issues. You can check for these in GSC’s Security Issues report.

To serve your site over HTTPS, you need an SSL certificate. Fortunately, there are a lot of people who want the web to be safe to browse, so you can get an SSL certificate for free – Let’s Encrypt is a great place to start. You may already have a certificate and HTTPS – WordPress.com, for example, automatically provides HTTPS and redirects HTTP traffic to HTTPS.
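If your host or platform doesn’t handle the HTTP-to-HTTPS redirect for you, it’s usually one setting in your web server. As a hedged illustration of what that rule does, here’s a Python WSGI middleware sketch (the names are hypothetical) that 301s any plain-HTTP request to the same URL on HTTPS. It assumes the app sees the original scheme; behind a load balancer that handles HTTPS for you, you’d need to check a header such as X-Forwarded-Proto instead, or this would redirect forever.

```python
def force_https(app):
    """WSGI middleware: 301-redirect any plain-HTTP request to HTTPS."""

    def wrapped(environ, start_response):
        if environ.get("wsgi.url_scheme") == "http":
            # Assumes ASCII paths and an app mounted at the site root.
            host = environ.get("HTTP_HOST", "")
            path = environ.get("PATH_INFO", "/")
            query = environ.get("QUERY_STRING", "")
            location = f"https://{host}{path}" + (f"?{query}" if query else "")
            start_response("301 Moved Permanently", [("Location", location)])
            return [b""]
        return app(environ, start_response)

    return wrapped


def hello(environ, start_response):
    """Hypothetical stand-in for your real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


app = force_https(hello)
```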

Technical K.O.

And with that, you can knock more technical SEO requirements off your list than 99% of website owners. While GSC and the other tools we’ve presented here can definitely help you find and fix technical problems, I can understand if this whole article was a bit complex.

Fortunately, if pouring all of this energy into technical SEO sounds like too much effort, we can help. We’ve developed a free SEO audit tool that gives you insights into how your site is performing from a technical perspective. And if you’d rather hand the work off entirely, at First Rank we have a team dedicated to this stuff – explore our Canadian SEO Services.